Can we "talk" with the data? A tiny case for testing pandas_ai for human clearance data

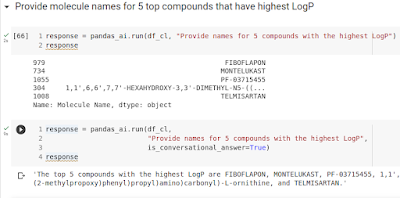

This is a test of the pandas_ai by package by Gabriele Venturi . PandasAI is a layer between the pandas data frame and LLMs that simplifies interaction with the data and makes it more conversational. I did a quick test for AstraZeneca clearance data from ChEMBL to test if pandas_ai will ease data manipulation. Google colab notebook is here . **TLDR** : the proposed solution could be more optimal, but it's working! Some issues with linking abstract things, but current LLMs do not have a defined knowledge graph, so there are no surprises. More packages will appear soon, that will ease interaction with private data. The algorithm behind the package provides real code for data frame filtering and manipulation. Because of this, it will fail if the data types of columns are not correctly defined. Simple request : Provide names for 5 compounds with the highest LogP. Answer : looks excellent, proper linking name to 'Molecule Name' and 'IDs' to 'Molecule ChEMBL ID&#